Put That in Your Pipeline and Smoke Test It!

I rarely bother to open my mouth as a speaker and step into a spotlight anymore. I've been mostly focused on observing, listening, and organizing tech communities in my local Boston area for the past few years. I just find that others'

A friend of mine asked if I would present at the local Ministry of Testing meetup, and since she did me a huge last-minute favor last month, I was more than happy to oblige.

"Testing Is Always Interesting Enough to Blog About"

The state and craft of quality (not to mention performance) engineering has changed dramatically in the 5 years since I purposely committed to it. After wasting most of my early tech career as a developer not writing testable software, the latter part of my career has been what some might consider penance for that.

I now work in the reliability engineering space. More specifically, I'm a Director of Customer Engineering at a company focusing on the F500. As a performance nerd, everything inherits a statistical perspective, not excluding how I view people, process, and technology. In this demographic, "maturity" models are a complex curve across dozens of teams and a history of IT decisions, not something you can pull out of an Agilista's sardine can or teach like the CMMI once thought it could.

A Presentation as Aperitif to Hive Minding

This presentation is a distillation of those experiences to date, offered as research. It was mostly inspired by wanting to learn what other practitioners like me think when faced with the challenge of conveying the importance of holistic thinking about software quality to business leaders.

Slides: bit.ly/put-that-in-your-pipeline-2019

Like I say at the beginning of this presentation, the goal is to incite collaboration about concepts, sharing the puzzle pieces I am actively working to clarify so that the whole group can get involved with each other in a constructive manner.

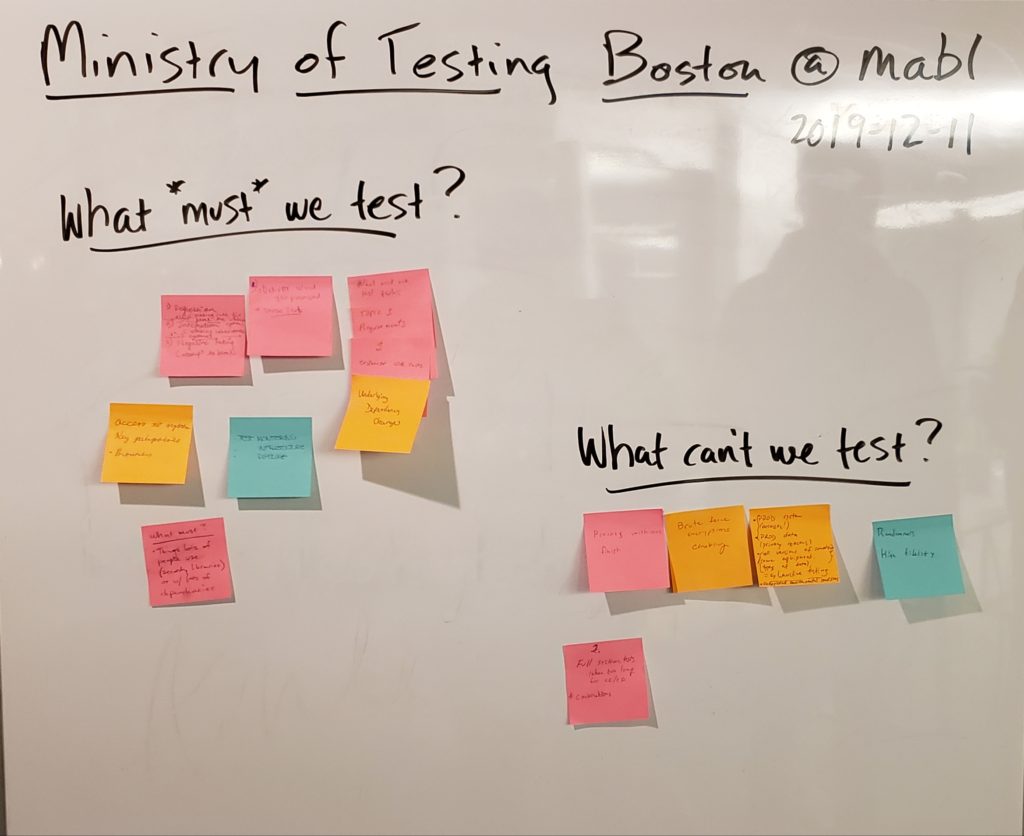

Hive Minding on What Can/Must/Shouldn't Be Tested

The phrase 'Hive Minding' is (to my knowledge and Google results) a turn-of-phrase invention of my own. It's one incremental iteration past my work and research in open spaces, emphasizing the notions of:

- Collective, aggregated collaboration

- Striking a balance between personal and real-time thinking

- Mindful, structured interactions to optimize outcomes

At this meetup, I beta launched the 1-2-4-All method from Liberating Structures that seemed to work so well when I was in France at a product strategy session last month. It so well balanced the opposite divergent and convergent modes of thinking, as discussed in The Creative Thinker's Toolkit, that I was compelled again to continue my active research into improving group facilitation.

Even after a few people had to leave the meetup early, there were still six groups of four. In France there were eight contributors, so I felt that this time I had a manageable but still scaled (4x) experiment of how hive minding works with larger groups.

My personal key learnings

Before I share some of the community feedback (below), I should mention what I, as the organizer, saw during the meetup and as outcomes after it:

- I need to use a bell or chime sound on my phone rather than having to interrupt people once the timers elapse for each of the 1-2-4 sessions; I hate stopping good conversation just because there's a pre-agreed-to meeting structure.

- We were able to expose non-quality-engineer people (like SysOps and managers) to concepts new to them, such as negative testing and service virtualization; hopefully next time they're hiring a QA manager, they'll have new things to chat about

- Many people confirmed some of the hypotheses in the presentation with real-world examples; you can't test all the things, sometimes you can't even test the thing because of non-technical limitations such as unavailability of systems, budget, or failure of management to understand the impact on organizational risk

- I was able to give shout-outs to great work I've run across in my journeys, such as Resilient Coders of Boston and technical projects like Mockiato and OpenTelemetry

- Quite a few people hung out afterward to express appreciation and interest in the sushi menu of ideas in the presentation. They are why I work so hard on my research areas.

- I have to stop saying "you guys". It slipped out twice and I was internally embarrassed that this is still a latent habit. At least one-third of the attendees were women in technology, and as important as it is to me to be an accomplice to underrepresented communities (including non-binary individuals), my words need work.

Some Community Feedback, Anonymized

- What must we test?

  - Regressions, integrations, negative testing

  - Deliver what you promised

  - Requirements & customer use cases

  - Underlying dependency changes

  - Access to our systems

  - Monitoring mechanisms

  - Pipelines

  - Things that lots of devs use (security libraries)

  - Things with lots of dependencies

- What can't we test?

  - Processes that never finish (non-deterministic, infinite streams)

  - Brute-force enterprise cracking

  - Production systems

  - Production data (privacy concerns)

  - "All" versions of something, some equipment, types of data

  - Exhaustive testing

  - Randomness

  - High-fidelity combinations where dimensions exponentially multiply cases

  - Full system tests (takes too long for CI/CD)
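The point about dimensions multiplying cases is easy to see with a little arithmetic. The sketch below uses made-up dimension names and values (none of them come from the meetup) and simply counts how many combinations a full cross-product would need.

```python
from itertools import product
from math import prod

# Hypothetical test dimensions, chosen only for illustration.
dimensions = {
    "browser": ["chrome", "firefox", "safari", "edge"],
    "os": ["windows", "macos", "linux"],
    "locale": ["en", "fr", "de", "ja", "es"],
    "account_tier": ["free", "pro", "enterprise"],
}

# The number of cases is the product of the sizes of every dimension.
total_cases = prod(len(values) for values in dimensions.values())

# Enumerating the full cross-product confirms the count.
all_cases = list(product(*dimensions.values()))
assert len(all_cases) == total_cases

print(total_cases)  # 4 * 3 * 5 * 3 = 180
```

Adding one more dimension with four values would push this to 720 cases, which is roughly why several groups filed exhaustive combinations under "can't test".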

A few thoughts from folks in Slack (scrubbed for privacy)...

Anonymized community member:

Writing up my personal answers to @paulsbruce’s hivemind questions yesterday evening: What can/should you test?

- well specified properties of your system, of the form "if A then B". Only test those when your gut tells you they are complex enough to warrant a test, or as a preliminary step to fixing a bug, and making sure it won't get hit again (see my answer to the next question).

- your monitoring and alerting pipeline. You can never test up front for everything, things will break. The least you can do is test for end failure, and polish your observability to make debugging/fixing easier.
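A property of the form "if A then B" can be written down as a small executable check. The sketch below is only an illustration: `normalize_name` and `holds_property` are hypothetical names that do not come from the discussion, and the property chosen (if a name has been normalized, then it carries no stray whitespace) is an assumption picked for brevity.

```python
def normalize_name(raw: str) -> str:
    """Hypothetical helper: collapse runs of whitespace and lowercase the name."""
    return " ".join(raw.split()).lower()


def holds_property(raw: str) -> bool:
    """If A (a name went through normalize_name), then B (no leading,
    trailing, or doubled spaces remain in the output)."""
    out = normalize_name(raw)
    return out == out.strip() and "  " not in out


# A handful of inputs, including the kind a bug report might have supplied.
for sample in ["  John Smith ", "john   smith", "JOHN\tSMITH", ""]:
    assert holds_property(sample), sample
```

Written just before fixing a bug, a check like this doubles as the regression test that keeps the same bug from being hit again.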


What can't/shouldn't you test?

- my answer here is a bit controversial, and a bit tongue in cheek (I'm the person writing more than 80% of the tests at my current job). You should test the least amount possible. In software, writing tests is very expensive. Tests add code, sometimes very complex code that is hard to read and hard to test in itself. This means it will quickly rot, or worse, it will prevent/keep people from modifying the software architecture or make bold moves because tests will break/become obsolete. For example, assume you tested every single detail of your current DB schema and DB behaviour. If changing the DB schema or moving to a new storage backend is "the right move" from a product standpoint, all your tests become obsolete.

- tests will often add a lot of complexity to your codebase, only for the purpose of testing. You will have to add mocking at every level. You will have to set up CI/CD jobs. The cost of this depends on what kind of software you write, the problem is well solved for webby/microservicy/cloudy things, much less so for custom software / desktop software / web frontends / software with complex concurrency. For example, in my current job (highly concurrent embedded firmware), everything is mocked: every state machine, every hardware component, every communication bus, so that individual state machines can be tested against. This means that if you add a new hardware sensor, you end up writing over 200 lines of boilerplate just to satisfy the mocking requirements. This can be alleviated with scaffolding tools, some clever programming language features, but there is no denying the added complexity.

To add to this, I think this is especially a problem for junior developers / developers who don't have enough experience with large scale codebases. They are starry-eyed about TDD and "best practices" and "functional programming will save the world", and so don't exercise the right judgment on where to test and where not to test. So you end up with huge test suites that basically test that calling database.get_customer('john smith') == customer('john smith') which is pretty useless. much more useful would be logging that result.name != requested_name in the function get_customer

the first is going to be run in a mocked environment either on the dev machine, on the builder, or in a staging environment, and might not catch a race condition between writers and readers that happens under load every blue moon. the logging will, and you can alert on it. furthermore, if the bug is caught as a user bug "i tried to update the customer's name, but i got the wrong result", a developer can get the trace, and immediately figure out which function failed
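To make the contrast above concrete, here is a minimal sketch, not the contributor's actual code. The `Customer` dataclass, the in-memory `_DATABASE` dict, the `store` parameter, and the logger name are all assumptions for illustration; only `get_customer` and the name comparison come from the message itself.

```python
import logging
from dataclasses import dataclass

logger = logging.getLogger("customers")


@dataclass
class Customer:
    name: str


# Hypothetical in-memory store standing in for a real database.
_DATABASE = {"john smith": Customer("john smith")}


def get_customer(requested_name, store=_DATABASE):
    """Look up a customer and log a warning when the result does not match the request."""
    result = store.get(requested_name)
    if result is not None and result.name != requested_name:
        # Runtime check: it also runs in production, so it can be alerted on.
        logger.warning(
            "get_customer mismatch: requested=%r got=%r",
            requested_name,
            result.name,
        )
    return result


# The style of test described as "pretty useless": it passes trivially
# against a mocked store and says nothing about behaviour under load.
assert get_customer("john smith") == Customer("john smith")
```

In a real system the warning would presumably feed whatever alerting is already in place; the argument is that the check runs under production load, where a mocked test never does.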

Then someone else chimed in:

It sounds like you’re pitting your anecdotal experience against the entire history of the industry and all the data showing that bugs are cheaper and faster to fix when found “to the left” i.e. before production. The idea that a developer can get a trace and immediately figure out which function failed is a starry-eyed fantasy when it comes to most software and systems in production in the world today.

The original contributor then continues with:

yeah, this is personal experience, and we don't just yeet stuff into production. as far as data-driven software engineering goes, I mostly find scientific studies to be of dubious value, meaning we're all back to personal experience. as for trace driven debugging, it's working quite well at my workplace, I can go much more into details about how these things work (I had a webinar with qt up online but I think they took it down)

as said, it's a bit tongue in cheek, but if there's no strong incentive to test something, I would say, don't. the one thing i do is keep tabs on which bugs we did fix later on, which parts of the sourcecode were affected, who fixed them, and draw conclusions from that

Sailboat Retrospective

Using the concept of a sailboat retrospective, here are the things that went well and a few that I'd like to improve:

Things that propel us:

- Many people said they really liked the collaborative nature of hive minding and would love to do this again because it got people to share learnings and ideas

- Reading the crowd in real-time, I could see that people were connecting with the ideas and message; there were no "bad actors" or trolls in the crowd

- Space, food, invites and social media logistics were handled well (not on me)

Things that slowed us:

- My presentation was 50+ mins, way too long for a meetup IMO.

To improve this, I need to:

- Break my content and narratives up into smaller chunks, ones that I can actually keep to a 20-minute timeframe. If people want to hear more, I can chain on topics.

- Recruit a timekeeper from the audience, someone who provides accountability

- Don't get into minutiae and examples that bulk out my message, unless asked

- Audio/video recording and last-minute mic difficulties kind of throw speakers off

To fix this? Maybe bring my own recording and A/V gear next time.

- Having to verbally interrupt people at the agreed-upon time breaks in 1-2-4-All seems counter to the collaborative spirit.

To improve this, possibly use a Pavlovian sound on my phone (ding, chime, etc.)

Things to watch out for:

- I used the all-too-common gender-binary phrase "you guys" twice. Imagine rooms where it would somehow be fine to say that, but saying "hey ladies" to a mixed crowd would be considered pejorative to many cisgender men. Everything can be improved and this is certainly one thing I plan to be very conscious of.

- Though it's important to have people write things down themselves, not everyone's handwriting can be read back by others after, and certainly not without high-fidelity photos of the post-its afterward.

To improve this, maybe stand with the final group representatives and if needed re-write the key concepts they verbalize to the "all" group on the whiteboard next to their post-it.

More reading: